An illustrated overview of the Unreal Engine MallocBinned2 allocator

Complete high level overview of the memory mechanisms in the Unreal Engine MallocBinned2 allocator.

No AI was used in the construction of this document, only a lot of plain human butt hours, some curiosity, and permission from my wife.

Introduction:

This is an in-depth study of the MallocBinned2 allocator (MB2) used in Unreal Engine. The objective of this document is to introduce the most crucial aspects of the allocator and its configurable knobs, and to dive deep into its internals.

This content is divided into a series of three posts (the third one is not available yet, it is still under construction). This first post covers all the internal policies and mechanisms of MB2. It keeps references to source code to a minimum and focuses on the high-level workings of the system.

The second post serves as a “tourist map”. It maps the concepts mentioned here to actual source code references, and adds other implementation details and platform-specific notes, such as which OS functions the allocator interacts with.

Finally, the third article is an overview of the tooling available to inspect and instrument MB2. It includes references to my plugin MallocBinnedInsights (still an early WIP), which will be freely available on GitHub under the MIT license and is being designed to work in tandem with the traces generated for Unreal Insights.

Please consider that all the material outlined here was deduced only from careful inspection of the source code and from local testing. This work is therefore a result of interpretation that could be partially incomplete or fail to capture the real intention of the authors (especially when discussing strategies).

High level description:

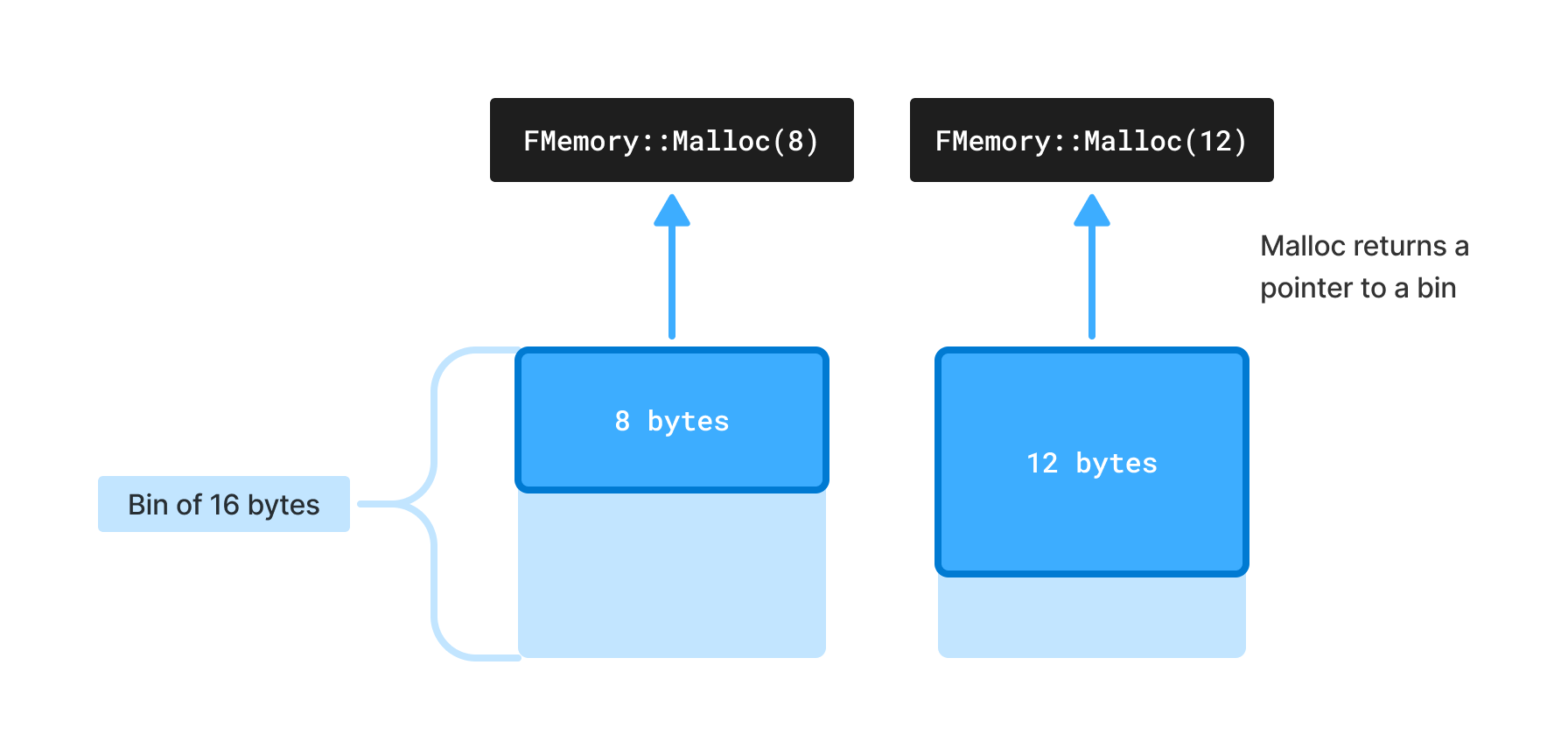

MallocBinned2 (MB2) is the default allocator for client and server builds on Windows and Linux. It fulfills allocation requests using predefined, fixed-size chunks of memory known as bins. Whenever someone requests memory from the allocator, it returns a pointer to the smallest bin that can satisfy the request.

Internally, MB2 allocates bins in naturally aligned pages of 64KiB (an MB2Page), meaning each page starts at a multiple of 64KiB. Each page is subdivided according to one of the supported bin sizes. Pointers to these subdivisions (bins) are returned by FMemory::Malloc.

MB2Pages (64KiB) are requested from a paged allocator that acts as a cache between the binned allocator and the operating system.

Thanks to these mechanisms, the allocator doesn't suffer from external fragmentation. However, internal fragmentation occurs when the size of a request doesn't exactly match one of the supported bin sizes.
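The bin-selection rule and the internal fragmentation it causes can be sketched as follows. This is a minimal illustration that assumes the default bin sizes from the table later in this post. It uses a binary search over a sorted array, which is not necessarily how MB2 maps sizes to bins internally. `SmallestBinFor` and `InternalWaste` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <optional>

// Default bin sizes as listed in this post's table (sorted, multiples of 16).
constexpr uint32_t kBinSizes[] = {
    16,   32,   48,   64,   80,   96,   112,  128,  144,  160,  176,  192,
    208,  256,  288,  320,  384,  448,  512,  560,  624,  720,  816,  912,
    1008, 1168, 1392, 1520, 1680, 1872, 2032, 2256, 2608, 2976, 3264, 3632,
    4080, 4368, 4672, 5040, 5456, 5952, 6544, 7280, 8176, 9360, 10912, 13104};

// Smallest bin that can hold Size bytes, or nullopt if no bin is big enough
// (MB2 would then treat the request as a large allocation).
std::optional<uint32_t> SmallestBinFor(std::size_t Size) {
    const uint32_t* It =
        std::lower_bound(std::begin(kBinSizes), std::end(kBinSizes), Size);
    if (It == std::end(kBinSizes)) {
        return std::nullopt;
    }
    return *It;
}

// Bytes lost to internal fragmentation when Size is served by its bin.
std::size_t InternalWaste(std::size_t Size) {
    const std::optional<uint32_t> Bin = SmallestBinFor(Size);
    return Bin ? *Bin - Size : 0;
}
```

For example, a 100-byte request lands in the 112-byte bin and wastes 12 bytes.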

Thread contention is reduced by a two-level caching mechanism:

- L1 is a thread-local cache that must be activated manually per thread. This is done mainly for time-critical threads, and requests served by this path are the fastest to fulfill.
- L2 is a cache shared between threads. It is implemented with a lock-free algorithm designed around the processor's cache lines, and it is accessible only to threads that opted in to have a local cache.

Memory in caches can be flushed and possibly returned to the operating system by performing a FMemory::Trim operation, more details will be discussed later.

MB2 aims to be very fast when allocating or freeing memory. It has an average time complexity of O(1) and needs very few assembly instructions when the L1 caches are active and hit.

Small allocations vs large allocations

An allocation is considered large if the requested size is bigger than the biggest supported bin size, or if the requested alignment is bigger than 256. Otherwise it is considered small and is fulfilled using one of the predefined bin sizes listed below (default values as of Unreal Engine 5.6).

L1 and L2 caching mechanisms are only available for small allocations.

Large allocations, also called OS allocations, interact directly with the paged allocator. The OS term comes from the fact that they talk almost directly to the operating system's memory functions (the paged allocator is just a thin layer).
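As a minimal sketch of this rule, a request can be classified by size and alignment alone. The sketch assumes the UE 5.6 defaults quoted in this post: the biggest bin is 13104 bytes and the maximum small alignment is 256. `ClassifyRequest` and `EAllocKind` are hypothetical names. The sketch also ignores the promotion of aligned requests to larger bins described under "Alignment in malloc operations".

```cpp
#include <cassert>
#include <cstddef>

// Assumed UE 5.6 defaults quoted in this post.
constexpr std::size_t kMaxSmallBinSize = 13104;  // biggest bin size
constexpr std::size_t kMaxSmallAlignment = 256;  // biggest small-alloc alignment

enum class EAllocKind { Small, Large };

// Large when the size exceeds the biggest bin or the alignment exceeds 256;
// small otherwise (served from one of the predefined bins).
constexpr EAllocKind ClassifyRequest(std::size_t Size, std::size_t Alignment) {
    return (Size > kMaxSmallBinSize || Alignment > kMaxSmallAlignment)
               ? EAllocKind::Large
               : EAllocKind::Small;
}
```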

About bin sizes

By design, every supported bin size must be a multiple of 16, so the minimum bin size is 16 bytes. Different subsystems of the allocator leverage this property by reinterpreting the same bin memory in different ways. Each stores its bookkeeping in data structures no bigger than 16 bytes. For example, bin memory owned by one of the caches is interpreted differently from a bin that is available and not owned by any cache. Both representations are 16 bytes long on 64-bit systems.
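To make the 16-byte constraint concrete, the two views of a bin can be modeled as plain structs and checked with `static_assert`. The field layouts below are an approximation assumed for illustration. They are named `FreeBlockSketch` and `BundleNodeSketch` to avoid implying they are the engine's exact definitions, so consult MallocBinned2.h for the real FFreeBlock and FBundleNode. The canary byte sits at byte 3 to match the 24-bit offset mentioned in the corruption examples near the end of this post.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Approximate free-bin view (assumed layout, for illustration only).
struct FreeBlockSketch {
    uint16_t BinSize;                // bytes 0-1
    uint8_t PoolIndex;               // byte 2
    uint8_t Canary;                  // byte 3, i.e. bits 24-31 of the first word
    uint32_t NumFreeBins;            // bytes 4-7
    FreeBlockSketch* NextFreeBlock;  // bytes 8-15 on a 64-bit target
};

// Approximate cached-bin view (assumed layout, for illustration only).
struct BundleNodeSketch {
    BundleNodeSketch* NextNodeInCurrentBundle;  // bytes 0-7
    union {
        BundleNodeSketch* NextBundle;  // bytes 8-15 hold either a link...
        int32_t Count;                 // ...or a node count, sharing storage
    };
};

// Both views overlay bin memory, so both must fit in the smallest bin.
constexpr std::size_t kMinBinSize = 16;
static_assert(sizeof(FreeBlockSketch) <= kMinBinSize, "free-block view too big");
static_assert(sizeof(BundleNodeSketch) <= kMinBinSize, "bundle-node view too big");
```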

Large allocations bigger than 64GiB are not supported and cause the application to crash. This limitation comes from MB2 using a uint32 to "remember" how many 16-byte blocks a request had:

$$2^{32}\ \text{(uint32 values)} \times 16\ \text{bytes} \,/\, 1024 \,/\, 1024 \,/\, 1024 = 64\ \text{GiB}$$
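The same arithmetic can be checked at compile time. This sketch restates the calculation above with hypothetical constant names. It assumes the count is stored as a plain uint32 of 16-byte units, as described above. Note that the largest count a uint32 can actually hold is 2^32 - 1, so the largest recordable size is 16 bytes short of 64GiB.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

constexpr uint64_t kUnitBytes = 16;  // size of one tracked unit
constexpr uint64_t kGiB = 1024ull * 1024 * 1024;
constexpr uint64_t kMaxUnits = std::numeric_limits<uint32_t>::max();  // 2^32 - 1

// 2^32 units of 16 bytes is exactly 64GiB...
static_assert((uint64_t{1} << 32) * kUnitBytes == 64 * kGiB, "2^32 * 16 B == 64 GiB");

// ...but a uint32 tops out at 2^32 - 1, so the largest size it can describe
// is 16 bytes short of 64GiB.
constexpr uint64_t kMaxRecordableBytes = kMaxUnits * kUnitBytes;
static_assert(kMaxRecordableBytes == 64 * kGiB - kUnitBytes, "just under 64 GiB");
```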

Alignment refresher

We say that a number M is aligned to N if M is a multiple of N, in other words M % N == 0. Now suppose M and N are integers and N is a positive power of two, N = 2^P. Then M is aligned to N exactly when the P least significant bits of M are zero, the same P low bits that are zero in N.

```
    M          N
========================
0b100[00] % 0b1[00] = 0  // Aligned
0b101[01] % 0b1[00] = 1  // Not aligned
      P         P
```
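Both forms of the definition can be written as small helpers. The mask form is what makes power-of-two alignment checks cheap. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// General definition: M is aligned to N when M is a multiple of N.
constexpr bool IsAlignedModulo(uint64_t M, uint64_t N) { return M % N == 0; }

// Power-of-two shortcut: for N = 2^P, N - 1 has exactly the P low bits set,
// so M is aligned to N when those P low bits of M are all zero.
constexpr bool IsAlignedMask(uint64_t M, uint64_t N) { return (M & (N - 1)) == 0; }

// The two rows of the diagram above: 0b100[00] and 0b101[01] against 0b1[00].
static_assert(IsAlignedModulo(0b10000, 0b100) && IsAlignedMask(0b10000, 0b100),
              "0b100[00] is aligned to 0b1[00]");
static_assert(!IsAlignedModulo(0b10101, 0b100) && !IsAlignedMask(0b10101, 0b100),
              "0b101[01] is not aligned to 0b1[00]");
```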

Alignment in malloc operations

The requested alignment is expected to be a power of two. Any value smaller than 16 is ignored and treated as a request for a 16-byte boundary. This comes for free in MB2, because the start address of every bin is a multiple of 16.

```cpp
void* PtrAlignedTo2 = /* 0x180010 */
    FMemory::Malloc(/* BytesCount */ 8, /* Alignment */ 2);
assert((size_t)PtrAlignedTo2 % 0b10 == 0);

void* PtrAlignedTo4 = /* 0x180020 */
    FMemory::Malloc(/* BytesCount */ 8, /* Alignment */ 4);
assert((size_t)PtrAlignedTo4 % 0b100 == 0);

void* PtrAlignedTo8 = /* 0x180030 */
    FMemory::Malloc(/* BytesCount */ 8, /* Alignment */ 8);
assert((size_t)PtrAlignedTo8 % 0b1000 == 0);

void* PtrAlignedTo16 = /* 0x180040 */
    FMemory::Malloc(/* BytesCount */ 8, /* Alignment */ 16);
assert((size_t)PtrAlignedTo16 % 0b10000 == 0);
```

If the requested alignment is a power of two in the range (16, 256], MB2 tries to promote the allocation to a larger bin. The requested size is rounded up to the nearest multiple of the alignment, and the bin that fits the rounded size is used.

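For example, take a 72-byte request with an alignment of 128 and a 68-byte request with an alignment of 32. Their sizes are rounded up to the nearest multiple of 128 and 32 respectively, i.e. 128 and 96 bytes, and both of these are supported bin sizes.

This satisfies the alignment requirement. Each MB2Page starts at a multiple of 65536 and is partitioned into N bins of the same size, with any slack placed at the start of the page. Since 65536, the bin size and therefore the slack are all multiples of the alignment, the start address of every bin in the page is a multiple of the requested alignment. It is not necessarily a multiple of the bin size: 96-byte bins start at page offsets 64, 160, 256, and so on.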
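The rounding and the resulting bin addresses can be checked with a short sketch. It assumes 64KiB pages with the slack at the start, as described later in this post. `AlignUp`, `BinOffset` and `AllBinsAligned` are hypothetical helpers, and only bin sizes that are exact multiples of the alignment (like 96 and 128 here) are exercised.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kPageSize = 64 * 1024;  // MB2Page size

// Rounds Size up to the next multiple of a power-of-two Alignment.
constexpr uint32_t AlignUp(uint32_t Size, uint32_t Alignment) {
    return (Size + Alignment - 1) & ~(Alignment - 1);
}

// Offset (from the page start) of the Index-th bin, with the slack at the
// start of the page and the bins packed after it.
constexpr uint32_t BinOffset(uint32_t BinSize, uint32_t Index) {
    return kPageSize % BinSize + Index * BinSize;
}

// True when every bin start inside a page is a multiple of Alignment.
// The page base itself is a multiple of 65536, so checking offsets is enough.
bool AllBinsAligned(uint32_t BinSize, uint32_t Alignment) {
    for (uint32_t Index = 0; Index < kPageSize / BinSize; ++Index) {
        if (BinOffset(BinSize, Index) % Alignment != 0) {
            return false;
        }
    }
    return true;
}
```

With 96-byte bins every start is 32-aligned but not 64-aligned. That is why a 64-aligned request has its size rounded to a multiple of 64 instead of reusing the 96-byte bin.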

If the updated request cannot be satisfied, the allocation is considered large. This happens when the rounded-up size is bigger than the biggest supported bin, or when the alignment is bigger than 256 (a configurable limit).

Large allocations are forwarded directly to the paged allocator. Their start address is a multiple of 65536, and they are requested with a granularity of 4096 bytes. As a result, small allocations with big alignments waste a lot of memory. For example, FMemory::Malloc(4, 512) forces MB2 to request 4KiB, of which only four bytes are meant to be used. However, this is not a common scenario in practice.

Behavior is undefined if the requested alignment for a small allocation is not a power of two, because the internal math assumes a proper power of two. For large allocations, a non-power-of-two alignment causes the application to crash.
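The reason non-power-of-two alignments break is easy to see with the usual mask-based round-up. The sketch below is a generic illustration with hypothetical helper names, not MB2's own code. With an alignment of 24, the mask trick returns 32, which is not a multiple of 24.

```cpp
#include <cassert>
#include <cstdint>

// True for 1, 2, 4, 8, ...: a power of two has exactly one bit set.
constexpr bool IsPowerOfTwo(uint64_t X) { return X != 0 && (X & (X - 1)) == 0; }

// Mask-based round-up: only correct when Alignment is a power of two,
// because only then does ~(Alignment - 1) clear exactly the low bits.
constexpr uint64_t AlignUpMask(uint64_t Value, uint64_t Alignment) {
    return (Value + Alignment - 1) & ~(Alignment - 1);
}
```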

Allocator metadata

MB2 owns internal metadata to remember relevant information like the size of a large allocation or the list of free bins that can be used to fulfill future requests.

Before reading any further, please take a look at my Friendly MB2 source code reference, because from here on we'll refer to MB2 components by their real names in the source code.

The metadata is structured as a hash table. The keys are pointers to locations of managed memory, reinterpreted as unsigned numbers, and the values are arrays of FPoolInfo objects. The next diagram briefly illustrates how 7 different keys can be mapped into the hash table.

In this context, managed memory is the memory that the allocator uses to fulfill an allocation request, i.e. the memory pointed to by the return value of an FMalloc::Malloc operation.

The number of buckets in the hash table is platform specific and is calculated based on the amount of installed physical memory and other variables. This metadata is laid out in memory in different contiguous blocks of the same type, which are requested directly from the operating system with an alignment of 65536.
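The two-level lookup (hash table key, then array of pools) can be sketched as follows. The shift amounts are assumed values chosen for illustration: 64KiB pages and 64 pools per hash table value. `std::unordered_map` stands in for MB2's own fixed-size bucket array and hashing, which are covered in the source code reference post. All names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <unordered_map>

// Assumed values, for illustration only.
constexpr unsigned kPageShift = 16;          // 64KiB MB2Pages
constexpr unsigned kPoolsPerEntryShift = 6;  // 64 pools per hash table value
constexpr std::size_t kPoolsPerEntry = std::size_t{1} << kPoolsPerEntryShift;

struct PoolInfoSketch {
    uint32_t TakenBins = 0;  // placeholder for the per-page bookkeeping
};

class PoolTableSketch {
public:
    // Returns the pool tracking the 64KiB page that contains Address,
    // creating the array of pools for its key on first use.
    PoolInfoSketch& FindOrAdd(uint64_t Address) {
        const uint64_t Key = Address >> (kPageShift + kPoolsPerEntryShift);
        const uint64_t PoolIndex = (Address >> kPageShift) & (kPoolsPerEntry - 1);
        return Entries[Key][PoolIndex];
    }

private:
    // Key: the address with the page bits and in-array bits shifted out.
    // Value: one PoolInfoSketch per 64KiB page covered by that key.
    std::unordered_map<uint64_t, std::array<PoolInfoSketch, kPoolsPerEntry>> Entries;
};
```

Any two addresses inside the same 64KiB page resolve to the same pool, which is what lets the allocator go from a bin pointer back to the FPoolInfo tracking its page.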

None of the internal MB2 subsystems seem to leverage the alignment imposed on the metadata memory. This is possibly an optimization opportunity, and more research is required here.

An FPoolInfo object (a.k.a. pool) is responsible for tracking the availability of different blocks of managed memory. The data stored in it depends on the type of allocation being tracked. For small allocations, it holds a linked list of free (and uncached) bins in a given MB2Page (a 64KiB block).

For large allocations, it remembers “how many contiguous 16-byte blocks were requested by the user” and the actual “allocation size requested from the operating system”.

See internal details on how the keys are hashed in my friendly source code reference post.

Metadata and managed memory for small allocations

Small allocations divide the managed memory into units known as bins. Bin memory is interpreted in one of two ways:

- As an FFreeBlock, if it is owned neither by an MB2 cache nor by the client code (code that uses the allocator).
- As an FBundleNode, if it is owned by one of the MB2 caches.

The FFreeBlock is discussed in more detail next.

An FFreeBlock is a 16-byte data structure that behaves like a linear allocator of bins: bins taken (allocated) from a free block cannot be given back (freed) to it. Instead, when MB2 marks a single bin as free, possibly after the client code performed an FMalloc::Free operation, the deallocated bin is reinterpreted as an FFreeBlock containing a single bin. This FFreeBlock is linked to the FPoolInfo that tracks the MB2Page the bin belongs to.

The first 16 bytes of any MB2Page are reserved for storing a special FFreeBlock object known as the pool-header, this object is aligned to a MB2Page boundary, is never returned to client code, and tracks the remaining consecutive bins available at the end of a MB2Page.

This way, an FPoolInfo object can hold two types of FFreeBlocks:

- A pool-header block, which tracks the remaining consecutive free bins.
- A single-bin block, which represents a bin that was previously allocated from the pool-header and then returned to the pool.

Because the first 16 bytes of an MB2Page are reserved for the pool-header block, none of the bin pointers returned by FMemory::Malloc will ever be aligned to 65536 (this applies only to small allocations).

The FPoolInfo object also tracks how many bins have been taken from the MB2Page. When the number of taken bins drops to zero, the whole page is returned to the PagedAllocator. An FFreeBlock object, in turn, tracks its number of consecutive free bins. For single-bin blocks this number is always one, and for the pool-header block it is in the range:

The FFreeBlock provides an AllocateBin() operation that returns a pointer to the next free bin in the block and decreases the number of consecutive free bins. For single-bin blocks, AllocateBin() returns a pointer to the block itself. For pool-header blocks, it returns a pointer to the next free bin, handing bins out from lower to higher addresses.

If the bin size partitions the MB2Page exactly, we say the page has no slack (or wasted) memory. In that case, MB2 consumes the first bin of the page to hold the pool-header info.

If the MB2Page has slack memory, the slack is placed at the start of the page and holds the pool-header structure. The slack is guaranteed to be a multiple of 16, because the MB2Page size and all supported bin sizes are multiples of 16. So an FFreeBlock (a 16-byte structure) always fits in the wasted area.
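The pool-header behavior can be sketched with integer addresses only. This is a simplified model that assumes the layout described above: slack at the start of the page, the first bin sacrificed on exact fits, and bins handed out from low to high addresses. `PoolHeaderSketch` is a hypothetical type, not MB2's FFreeBlock. It ignores single-bin blocks, canaries and the pool index, and it never touches real memory.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kPageSize = 64 * 1024;

// Simplified pool-header block: counts the consecutive free bins left at the
// end of the page and hands them out from lower to higher addresses.
struct PoolHeaderSketch {
    uint64_t PageBase;  // multiple of 64KiB
    uint32_t BinSize;
    uint32_t NumFreeBins;

    PoolHeaderSketch(uint64_t InPageBase, uint32_t InBinSize)
        : PageBase(InPageBase), BinSize(InBinSize), NumFreeBins(kPageSize / InBinSize) {
        // Exact fit: there is no slack to hold the header, so the first bin holds it.
        if (kPageSize % InBinSize == 0) {
            --NumFreeBins;
        }
    }

    // Returns the address of the next free bin, or 0 when the block is exhausted.
    uint64_t AllocateBin() {
        if (NumFreeBins == 0) {
            return 0;
        }
        --NumFreeBins;
        // Bins are packed against the end of the page, so counting back from the
        // end skips the slack (or header bin) at the start.
        return PageBase + kPageSize - uint64_t{NumFreeBins + 1} * BinSize;
    }
};
```

With 16-byte bins, the first two bins come out at 0x180010 and 0x180020, matching the addresses shown in the alignment examples earlier in this post.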

The next table outlines the slack memory in a MB2Page for the default supported bin sizes.

| Bin size (bytes) | Bins per 64KiB page | Slack per 64KiB page (bytes) | First bin is header |
|---|---|---|---|
| 16 | 4096 | 0 | true |
| 32 | 2048 | 0 | true |
| 48 | 1365 | 16 | false |
| 64 | 1024 | 0 | true |
| 80 | 819 | 16 | false |
| 96 | 682 | 64 | false |
| 112 | 585 | 16 | false |
| 128 | 512 | 0 | true |
| 144 | 455 | 16 | false |
| 160 | 409 | 96 | false |
| 176 | 372 | 64 | false |
| 192 | 341 | 64 | false |
| 208 | 315 | 16 | false |
| 256 | 256 | 0 | true |
| 288 | 227 | 160 | false |
| 320 | 204 | 256 | false |
| 384 | 170 | 256 | false |
| 448 | 146 | 128 | false |
| 512 | 128 | 0 | true |
| 560 | 117 | 16 | false |
| 624 | 105 | 16 | false |
| 720 | 91 | 16 | false |
| 816 | 80 | 256 | false |
| 912 | 71 | 784 | false |
| 1024-16 (1008) | 65 | 16 | false |
| 1168 | 56 | 128 | false |
| 1392 | 47 | 112 | false |
| 1520 | 43 | 176 | false |
| 1680 | 39 | 16 | false |
| 1872 | 35 | 16 | false |
| 2048-16 (2032) | 32 | 512 | false |
| 2256 | 29 | 112 | false |
| 2608 | 25 | 336 | false |
| 2976 | 22 | 64 | false |
| 3264 | 20 | 256 | false |
| 3632 | 18 | 160 | false |
| 4096-16 (4080) | 16 | 256 | false |
| 4368 | 15 | 16 | false |
| 4672 | 14 | 128 | false |
| 5040 | 13 | 16 | false |
| 5456 | 12 | 64 | false |
| 5952 | 11 | 64 | false |
| 6544 | 10 | 96 | false |
| 7280 | 9 | 16 | false |
| 8192-16 (8176) | 8 | 128 | false |
| 9360 | 7 | 16 | false |
| 10912 | 6 | 64 | false |
| 13104 | 5 | 16 | false |
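Every row of the table can be derived from the bin size alone. The number of bins is 65536 / bin size, the slack is 65536 % bin size, and the first bin holds the header only when the slack is zero. A small sketch with hypothetical names:

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kPageSize = 64 * 1024;

struct PageLayout {
    uint32_t Partitions;    // bins that fit in one MB2Page
    uint32_t Slack;         // leftover bytes, kept at the start of the page
    bool FirstBinIsHeader;  // no slack: the first bin stores the pool header
};

constexpr PageLayout LayoutFor(uint32_t BinSize) {
    const uint32_t Slack = kPageSize % BinSize;
    return {kPageSize / BinSize, Slack, Slack == 0};
}
```

For example, `LayoutFor(96)` gives 682 partitions with 64 bytes of slack. The slack is always a multiple of 16 for bin sizes that are multiples of 16.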

MB2 binds each pool to one bin size through an internal table indexed by bin size. Each entry in the table holds two linked lists of pools: one for active pools (pools that still have free bins) and another for exhausted pools.

Metadata and managed memory for large allocations

Large allocation requests are forwarded directly to a paged allocator. The start address of a large allocation is aligned to 65536, and no metadata is stored inside the allocated memory. The lowest 16 bits (the 4 least significant hexadecimal digits) of the address are therefore expected to be zero, which is never true for small allocations. Free and Realloc operations leverage this to tell whether the received pointer refers to a small or a large allocation.

```cpp
// This is a large allocation because no bin can fulfill the request:
// memory is requested directly from the PagedAllocator
void* BigAllocPtr = FMemory::Malloc(32756);

// The returned pointer is a multiple of 65536 (0x10000),
// which is only true for large allocations
assert(reinterpret_cast<uint64_t>(BigAllocPtr) % 0x10000 == 0);

// Free the large allocation, which returns the memory
// directly to the PagedAllocator
FMemory::Free(BigAllocPtr);
```
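The pointer test described above fits in one line. This is a minimal sketch that assumes 64KiB MB2Pages. `IsOSAllocationSketch` is a hypothetical name, and unlike a real Free path it does not special-case nullptr.

```cpp
#include <cassert>
#include <cstdint>

// Large (OS) allocations start on a 64KiB boundary. Small allocations never do,
// because the first 16 bytes of every MB2Page hold the pool header. The low
// 16 bits of the address are therefore enough to tell the two apart.
inline bool IsOSAllocationSketch(const void* Ptr) {
    return (reinterpret_cast<uintptr_t>(Ptr) & 0xFFFF) == 0;
}
```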

The size of a large allocation is a multiple of the virtual memory page size defined by the OS, which is usually 4KiB. For example, a request of 17852 bytes is converted internally to 20480 bytes, the nearest multiple of 4096 that is not smaller than 17852.
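Rounding a large request up to whole OS pages can be sketched as follows. The sketch assumes a 4096-byte page, whereas the real page size comes from the platform, and the helper name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Rounds Size up to a whole number of OS pages (4096 bytes assumed by default).
constexpr uint64_t RoundUpToOSPages(uint64_t Size, uint64_t OSPageSize = 4096) {
    return (Size + OSPageSize - 1) / OSPageSize * OSPageSize;
}
```

`RoundUpToOSPages(17852)` yields 20480. The 4-byte, 512-aligned request discussed earlier ends up occupying a full 4096-byte page.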

The requested size of a large allocation is tracked by the FPoolInfo object owning the initial address of the allocated block (it’s stored as “how many 16 byte units were requested”), which can be any number in the range:

The maximum requestable size is:

The actual size requested to the operating system is tracked in the FirstFreeBlock pointer.

For large allocations the FPoolInfo object doesn’t point directly to the allocated block, however given the start address of a large allocation it is possible to ask the PoolHashTable for the PoolInfo that tracks the allocated memory.

Supported alignments for large allocations range from 16 to 65536. If the alignment is bigger than an MB2Page (65536), the application will crash.

Caching mechanism for small allocations

When a pointer to a bin is freed (via FMalloc::Free()), MB2 caches it in a thread-local cache (FPerThreadFreeBlockLists) that uses a LIFO scheme. This cache holds a table with one entry per supported bin size. Each entry is formed by two containers of bin pointers known as bundles: a partial bundle and a full bundle. These caches are activated for a given thread by calling FMemory::SetupTLSCachesOnCurrentThread().

Each bundle is a linked list of bundle nodes. A bundle node reinterprets the bin memory for the cache subsystem, which implies that a bundle node object cannot be bigger than 16 bytes (the minimum bin size). Additionally, each bundle must respect two configurable limits:

- No more than 64 nodes (pointers to bins).
- No more than 65536 bytes of pointed-to bin memory, or 8192 bytes if aggressive memory saving is active. Aggressive memory saving has been disabled by default since 2018.

If either limit is reached, the bundle is considered full.

Consider a thread cache entry that starts empty (an FFreeBlockList with both partial and full bundles empty). When the partial bundle gets full, it becomes the full bundle. If the partial bundle gets full again, the thread-local cache is considered full, and nothing can be added until something is removed. In this scenario, Unreal evicts the full bundle and inserts it into the thread-shared cache (the GlobalRecycler). The following group of diagrams illustrates the behavior of a thread-local cache.
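The partial/full bundle juggling can be modeled in a few lines. This is a simplified sketch that assumes the default limits quoted above (64 nodes, 65536 bytes). `FreeBlockListSketch` is hypothetical. Bundles are `std::vector` here instead of lists threaded through the freed bins, and pushing the evicted bundle into the GlobalRecycler is left to the caller.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Default bundle limits quoted in this post (both are configurable).
constexpr std::size_t kMaxBundleCount = 64;
constexpr std::size_t kMaxBundleBytes = 64 * 1024;

// One entry of a thread-local cache, for a single bin size.
struct FreeBlockListSketch {
    std::vector<void*> Partial;
    std::vector<void*> Full;

    static bool IsBundleFull(const std::vector<void*>& Bundle, std::size_t BinSize) {
        return Bundle.size() >= kMaxBundleCount ||
               Bundle.size() * BinSize >= kMaxBundleBytes;
    }

    // Caches a freed bin (LIFO). Returns false when both bundles are full; the
    // caller is then expected to detach the full bundle and try again.
    bool PushToFront(void* Bin, std::size_t BinSize) {
        if (IsBundleFull(Partial, BinSize)) {
            if (!Full.empty()) {
                return false;           // the local cache is full
            }
            Full = std::move(Partial);  // the partial bundle becomes the full one
            Partial.clear();
        }
        Partial.push_back(Bin);
        return true;
    }

    // Detaches the full bundle, e.g. to push it into the thread-shared cache.
    std::vector<void*> PopFullBundle() {
        std::vector<void*> Bundle = std::move(Full);
        Full.clear();
        return Bundle;
    }

    // Reuses the most recently cached bin first.
    void* PopFromFront() {
        if (Partial.empty() && !Full.empty()) {
            Partial = std::move(Full);
            Full.clear();
        }
        if (Partial.empty()) {
            return nullptr;
        }
        void* Bin = Partial.back();
        Partial.pop_back();
        return Bin;
    }
};
```

With 16-byte bins, the node limit is reached first, so the entry accepts 128 bins (two bundles of 64) before refusing more.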

The thread-shared cache, or GlobalRecycler, is another table with one entry per supported bin size. The table and each of its entries are aligned to the CPU L1 cache line, which is typically 64 bytes on x86_64. Each entry holds 8 pointers to bundles, referred to as slots. The number of slots can be configured, and 8 pointers of 8 bytes make the entry span exactly one cache line.

In order to keep the GlobalRecycler lock-free, slots are modified using interlocked instructions; when two threads are adding/removing a bundle into/from the same slot at the same time, one of the threads will succeed and the other will retry in the next slot.
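A single GlobalRecycler entry can be sketched with `std::atomic` and compare-exchange. This illustrates the slot-scanning idea only. It assumes 8 slots of lock-free atomic pointers in one 64-byte line, and `RecyclerEntrySketch` is a hypothetical type that omits everything MB2 does around it.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>

constexpr std::size_t kSlotsPerEntry = 8;

// One table entry: 8 pointer-sized slots sharing a single 64-byte cache line.
struct alignas(64) RecyclerEntrySketch {
    std::array<std::atomic<void*>, kSlotsPerEntry> Slots{};

    // Stores a bundle in the first empty slot. If another thread wins the race
    // for a slot, the compare-exchange fails and the next slot is tried.
    bool PushBundle(void* Bundle) {
        for (std::atomic<void*>& Slot : Slots) {
            void* Expected = nullptr;
            if (Slot.load(std::memory_order_relaxed) == nullptr &&
                Slot.compare_exchange_strong(Expected, Bundle, std::memory_order_acq_rel)) {
                return true;
            }
        }
        return false;  // every slot is taken: the caller falls back to the pools
    }

    // Takes any available bundle, or returns nullptr when the entry is empty.
    void* PopBundle() {
        for (std::atomic<void*>& Slot : Slots) {
            void* Bundle = Slot.load(std::memory_order_relaxed);
            if (Bundle != nullptr &&
                Slot.compare_exchange_strong(Bundle, nullptr, std::memory_order_acq_rel)) {
                return Bundle;
            }
        }
        return nullptr;
    }
};

static_assert(sizeof(RecyclerEntrySketch) == 64, "one entry per cache line");
```

Each slot holds a whole bundle, and nothing is read through the pointer before the exchange. A failed compare-exchange therefore only means another thread got there first, and the loop moves on to the next slot.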

When MB2 tries to push a new bundle into the GlobalRecycler and all slots for the given bin size are already taken, caching fails. In that case, each bundle node in the rejected bundle is reinterpreted as a single-bin FFreeBlock and linked into the list of free blocks of the FPoolInfo that owns its address.

If the allocator gets this far it will check if the MB2Page tracked by the FPoolInfo is fully unused in order to flush it into the PagedAllocator.

Caching mechanism for large allocations

Large allocations interact directly with the PagedAllocator, a thin layer between MB2 and the operating system. Freed memory is not returned to the OS immediately. Instead, the paged allocator caches up to ~64MiB and uses it to fulfill subsequent requests (either large allocations or MB2Pages of bins for small allocations), which avoids expensive kernel calls.

However, MB2 will bypass the PagedAllocator and forward the request directly to the operating system when the block being freed or allocated is ~16MiB long.

Cache clearing mechanisms and memory trimming

Users can request to clear the thread-local caches and the blocks cached in the paged allocator by calling FMemory::Trim. This tries to free as much memory as possible. Unreal's garbage collector calls it after finishing a purge of UObjects.

The GlobalRecycler was not mentioned in the diagram above because, at the time of writing (Unreal 5.6), the thread-shared cache cannot be evicted by a trim operation. This is possibly meant to amortize the cost of future allocations while keeping trims cheap. If you “desperately” need to return memory to the operating system and want to experiment with this, you can add the following code, but proceed with caution.

```cpp
if (GMallocBinnedPerThreadCaches && bTrimThreadCaches)
{
    FMallocBinnedCommonUtils::Trim(*this);

    // Add at FMallocBinned2::Trim, file MallocBinned2.cpp.
    // Take the snippet with a grain of salt, as there might be additional
    // context that I'm ignoring, like the frequency of trims.
    // Pops every bundle left in the GlobalRecycler and frees its bins.
    for (uint8_t PoolIndex = 0; PoolIndex != UE_MB2_SMALL_POOL_COUNT; ++PoolIndex)
    {
        while (FBundleNode* BundleToRecycle = Private::GGlobalRecycler.PopBundle(PoolIndex))
        {
            BundleToRecycle->NextBundle = nullptr; // Clear union
            Private::FreeBundles(*this, BundleToRecycle, PoolIndexToBinSize(PoolIndex), PoolIndex);
        }
    }

    // More code...
}
```

Each thread-local cache is locked by its owning thread while the thread is “awake”. Cache evictions happen per thread, and only during the Awake -> Sleeping transition (just before FEvent::Wait is called). During this transition, the local cache is unlocked through a call to FMemory::MarkTLSCachesAsUnusedOnCurrentThread() and may be evicted by Unreal. The eviction happens only if a FMemory::Trim request was issued beforehand; otherwise the cache is preserved. This means Trim operations are asynchronous and complete at a different time for each thread, depending on when it goes to sleep.

In the previous scenario, thread 0 was not the first one to evict its cache, even though it started the trim operation. Also, the cache of thread 3 was not evicted while the thread was already sleeping. It was evicted later, after the thread woke up and went to sleep again. This happens because an eviction caused by a trim is only effective during a thread's Awake -> Sleeping transition.
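The deferred nature of a trim can be modeled with a global counter that each thread compares against when it goes to sleep. This is a hypothetical model of the behavior described above, not a description of how MB2 actually signals trims. In the engine, the sleep-side hook is FMemory::MarkTLSCachesAsUnusedOnCurrentThread(). `GTrimEpochSketch`, `RequestTrimSketch` and `ThreadCacheSketch` are illustrative names, and the cache contents are reduced to a counter.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Bumped by every trim request; threads compare against it when going to sleep.
std::atomic<uint64_t> GTrimEpochSketch{0};

void RequestTrimSketch() { GTrimEpochSketch.fetch_add(1, std::memory_order_release); }

struct ThreadCacheSketch {
    uint64_t SeenEpoch = 0;   // last trim this thread has honored
    uint32_t CachedBins = 0;  // stand-in for the cached bundles
    bool bAwake = true;       // the owning thread "locks" its cache while awake

    void MarkUsed() { bAwake = true; }  // waking up

    // Called just before the thread waits: flushes only if a trim is pending.
    void MarkUnused() {
        bAwake = false;
        const uint64_t Epoch = GTrimEpochSketch.load(std::memory_order_acquire);
        if (Epoch != SeenEpoch) {
            CachedBins = 0;  // evict the cache
            SeenEpoch = Epoch;
        }
    }
};
```

A thread that was already asleep when the trim was requested keeps its cache until it wakes up and goes back to sleep, mirroring the thread 3 case above.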

On non-desktop platforms (iOS and Android), a thread initiating a trim operation can also flush the caches of other threads that are currently sleeping (although Android uses MB3 by default instead of MB2). This is skipped on desktop platforms (Windows, Linux and Mac), because they tend to have higher thread and processor counts that make the operation slower.

Important threads like the GameThread, RenderingThread, AudioThread and IoDispatcher activate and deactivate the thread-local cache by calling FMemory::SetupTLSCachesOnCurrentThread() and FMemory::ClearAndDisableTLSCachesOnCurrentThread() respectively. The clear operation evicts the local cache even if no trim was initiated beforehand.

Memory guards or canaries

MB2 detects memory corruption (memory modified unexpectedly) by marking memory that external systems should not access with bit patterns known as guards or canaries. The name comes from the canaries used in coal mines to detect deadly gases. These marks depend on context and are applied to PoolInfo and FreeBlock objects as follows.

PoolInfo Canaries | FPoolInfo::ECanary::Unassigned (0x3941)

Set when the pool is tracking neither an MB2Page of bins for small allocations nor a large allocation. This is expected in three cases:

- When a given hash bucket initializes an array of pools

- For small allocations, after freeing the last taken bin of a pool

- After freeing a large allocation.

PoolInfo Canaries | FPoolInfo::ECanary::FirstFreeBlockIsOSAllocSize (0x17ea)

Set when the pool is tracking a large allocation, i.e. an allocation size greater than the biggest supported bin size or whose alignment is greater than 256.

PoolInfo Canaries | FPoolInfo::ECanary::FirstFreeBlockIsPtr (0xf317)

Set when the pool is tracking a MB2Page (64KiB) of a given bin size.

If the guards do not transition as described in the diagram above, the application crashes, reporting that a memory corruption happened. These transitions and canaries are checked at different moments for a given FPoolInfo:

- When you allocate a bin from the MB2Page tracked by a given FPoolInfo (small alloc).

- When a bin is evicted from one of the caches and gets linked back into the FPoolInfo::FirstFreeBlock list (small free).

- When you perform a large allocation.

- When you free a large allocation.
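The FPoolInfo canary rules can be read as a small state machine. The sketch below encodes the state changes implied by the three canary descriptions above, using the canary values listed there. The enum and function names are hypothetical, and any value outside the three known canaries is treated as corruption.

```cpp
#include <cassert>
#include <cstdint>

// FPoolInfo canary values as listed in this post.
enum class ECanarySketch : uint16_t {
    Unassigned = 0x3941,
    FirstFreeBlockIsOSAllocSize = 0x17ea,
    FirstFreeBlockIsPtr = 0xf317,
};

// A pool leaves Unassigned when it starts tracking a small page or a large
// allocation, and returns to Unassigned when that page or allocation is released.
bool IsValidTransition(ECanarySketch From, ECanarySketch To) {
    using C = ECanarySketch;
    switch (From) {
        case C::Unassigned:
            return To == C::FirstFreeBlockIsPtr || To == C::FirstFreeBlockIsOSAllocSize;
        case C::FirstFreeBlockIsPtr:
        case C::FirstFreeBlockIsOSAllocSize:
            return To == C::Unassigned;
    }
    return false;  // From is not a known canary: memory was corrupted
}
```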

FFreeBlock Canary | EBlockCanary::Value (0xe3)

This canary is set when a MB2Page of bins is just created (Set for the pool-header FFreeBlock), or when a bin is evicted from a cache and gets linked back into the pool owning it.

FreeBlocks also have two other canaries, pre-fork and post-fork, which are useful for server processes forked on Linux. Their values are used to avoid freeing memory in pages shared with the parent process, but they will not be covered here.

The bit pattern is checked for the pool-header FFreeBlock whenever a FMemory::Free() operation is requested, and for the FPoolInfo::FirstFreeBlock (only the first element in the linked list) when another bin gets evicted from one of the caches and is returned into the FPoolInfo owning the bin address.

The following snippets outline some common corruption scenarios and how MB2 handles them. Pay special attention to how detection of most of these is delayed when GMallocBinnedPerThreadCaches is 1. This is because the canaries are checked on FFreeBlock and FPoolInfo objects, not on FBundleNodes.

```cpp
// A double free crashes the application if GMallocBinnedPerThreadCaches is 0.
// Otherwise the thread cache stores the same pointer twice, and two different
// allocations can be fulfilled with the same pointer (memory gets stomped).
void* Pointer = FMemory::Malloc(8);
FMemory::Free(Pointer);
FMemory::Free(Pointer);
```

```cpp
// An allocation that happened before
void* SomeAllocation = FMemory::Malloc(8);

// Modify the pointed-to value after the pointer got freed
auto* Pointer = static_cast<uint8_t*>(FMemory::Malloc(8));
FMemory::Free(Pointer);
*Pointer = 20;

// If GMallocBinnedPerThreadCaches is 0, the canary check for Pointer is
// performed here, but it won't crash: the FFreeBlock canary sits at an offset
// of 24 bits and we only modified the first 8 bits.
FMemory::Free(SomeAllocation);
```

```cpp
void* SomeAllocation = FMemory::Malloc(8);
auto* Pointer = static_cast<uint32_t*>(FMemory::Malloc(8));
FMemory::Free(Pointer);
*Pointer = 0x12000000;

// The canary check for Pointer is performed here, and it crashes because
// bits 24 to 31 (which the FFreeBlock uses to hold the canary) were modified.
// This only happens consistently if GMallocBinnedPerThreadCaches is 0;
// otherwise the check is delayed until Pointer gets evicted from the caches.
FMemory::Free(SomeAllocation);
```

This shows that the default guarding mechanism provided by MB2 is not reliable in many scenarios, and that it can easily be bypassed depending on the order in which frees and allocations happen. The caching subsystems delay the detection of a corruption, possibly leaving errors that are never detected. This is aggravated by the fact that a cache can hold the same pointer multiple times, so multiple allocation requests can be fulfilled with the same pointer (stomping the memory).

These and other potential issues are handled by decorators that wrap the FMalloc object and add extra protection mechanisms (not covered here). For an example, see the StompAllocator created by P. Zurita, a proxy that uses operating system functions to protect freed memory blocks from being accessed. This makes it possible to debug unexpected accesses and other corruptions as they occur, instead of randomly after 100 other operations have happened.

Conclusion

We've exhaustively overviewed crucial aspects of the MB2 allocator: its internal representation of memory and metadata, its caching strategies, and a very brief introduction to its corruption protection mechanisms. However, memory is a massive topic and a lot remains uncovered. The most notable gaps are the forking mechanism used by Linux servers, how Unreal handles low-memory workloads, and specific details on thread safety. Those topics should hopefully be more accessible now that the big picture has been outlined here.

If you want to map this knowledge to actual source code and experiment with the different available knobs, please check my second post. If you want to analyze, optimize or profile memory usage, wait for my third post, which will cover the existing instrumentation tooling in detail.

Finally, it is worth mentioning that MallocBinned3 is a newer version of the allocator. It only supports 64-bit systems and handles memory in non-fixed-size pages, starting from 4KiB.

Thanks for reading!

Credits

Written by Romualdo Villalobos